Introduction

The primary goal of hand gesture recognition with wearables is to enable gestural user interfaces in mobile and ubiquitous environments. A key challenge is that a single hand gesture can be performed in several ways, each with its own configuration of motions and spatio-temporal dependencies. Existing methods, however, generally focus on the characteristics of a single point on the hand and ignore the diversity of motion information across the hand skeleton. As a result, they face two key challenges in characterizing hand gestures from multiple wearable sensors: motion representation and motion modeling. To address these challenges, we define a spatio-temporal framework, named STGauntlet, that explicitly characterizes the hand motion context of spatio-temporal relations among multiple bones and detects hand gestures in real time. In particular, our framework incorporates a Lie group-based representation to capture the inherent structural variety of hand motions, including the spatio-temporal dependencies among multiple bones. To evaluate our framework, we developed a hand-worn prototype device with multiple motion sensors. Our in-lab study on a dataset collected from nine subjects suggests that our approach significantly outperforms state-of-the-art methods. We also present in-the-wild examples that highlight the interaction capabilities of our framework.
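The idea of a Lie group-based representation of relations between bones can be illustrated with a minimal sketch. It assumes that each motion sensor reports its bone's orientation as a 3x3 rotation matrix in a shared world frame. Each (hypothetical) parent/child bone pair is encoded by the relative rotation `R_parent^T R_child`, an element of the Lie group SO(3), which is then mapped to a 3-vector in the Lie algebra so(3) with the logarithm map. This follows the general idea of Lie group skeleton features and is not necessarily STGauntlet's exact formulation; the function names, bone names, and pairings are illustrative assumptions.

```python
import math
from typing import Dict, List, Sequence, Tuple

Matrix = List[List[float]]


def transpose(m: Matrix) -> Matrix:
    return [[m[j][i] for j in range(3)] for i in range(3)]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def rot_z(angle: float) -> Matrix:
    """Rotation about the z-axis (used here only to build test inputs)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def so3_log(r: Matrix) -> List[float]:
    """Logarithm map SO(3) -> so(3), returned as a rotation vector theta * axis."""
    trace = r[0][0] + r[1][1] + r[2][2]
    cos_theta = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    theta = math.acos(cos_theta)
    skew = [r[2][1] - r[1][2], r[0][2] - r[2][0], r[1][0] - r[0][1]]
    if theta < 1e-9:
        # Near the identity: first-order approximation, log(R) ~ (R - R^T) / 2.
        return [0.5 * x for x in skew]
    if math.pi - theta < 1e-6:
        # Near theta = pi, sin(theta) ~ 0, so recover the axis from the
        # symmetric part, which approaches 2 * n n^T - I (axis sign is ambiguous).
        diag = [r[0][0], r[1][1], r[2][2]]
        i = max(range(3), key=lambda k: diag[k])
        n = [0.0, 0.0, 0.0]
        n[i] = math.sqrt(max(0.0, (diag[i] + 1.0) / 2.0))
        for j in range(3):
            if j != i:
                n[j] = (r[i][j] + r[j][i]) / (4.0 * n[i])
        return [theta * x for x in n]
    # General case: omega = theta / (2 sin theta) * vee(R - R^T).
    scale = theta / (2.0 * math.sin(theta))
    return [scale * x for x in skew]


def relative_rotation(r_parent: Matrix, r_child: Matrix) -> Matrix:
    """Child bone orientation expressed in the parent bone's frame."""
    return matmul(transpose(r_parent), r_child)


def frame_features(orientations: Dict[str, Matrix],
                   bone_pairs: Sequence[Tuple[str, str]]) -> List[float]:
    """Concatenate so(3) vectors of all bone pairs for one time step."""
    feats: List[float] = []
    for parent, child in bone_pairs:
        feats.extend(so3_log(relative_rotation(orientations[parent], orientations[child])))
    return feats


if __name__ == "__main__":
    # Hypothetical two-bone example: wrist and index proximal phalanx.
    frame = {"wrist": rot_z(0.2), "index_proximal": rot_z(0.7)}
    print(frame_features(frame, [("wrist", "index_proximal")]))
```

Stacking these per-frame vectors over time yields a sequence that a temporal model could consume. Mapping to so(3) is a common design choice because the Lie algebra is a vector space, so ordinary distances and averages behave sensibly there, unlike on raw rotation matrices.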

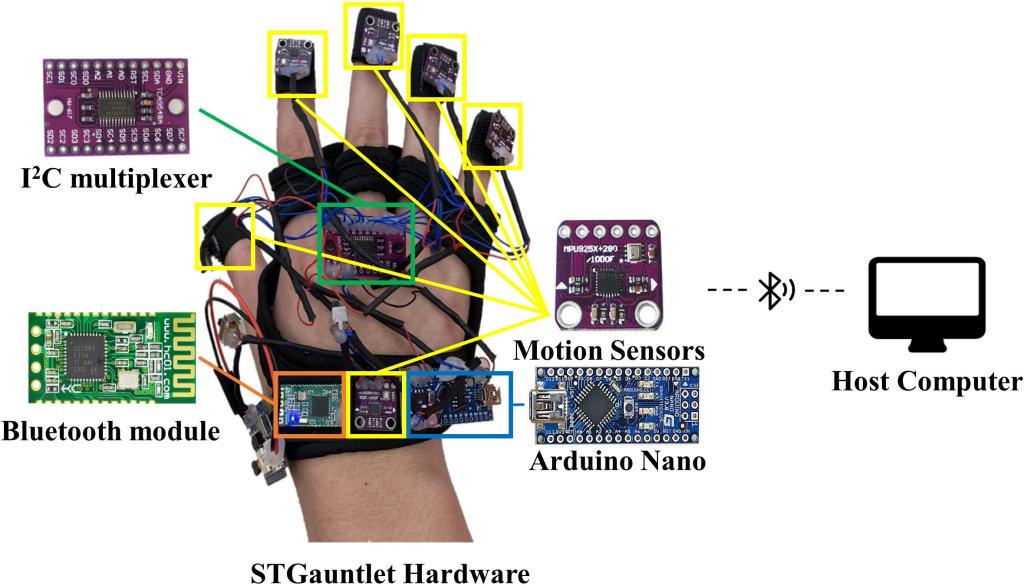

Hardware Apparatus

Example Applications

Motion Sensing Games Adapter

Unmanned Car Remote Controller

Virtual Laboratory

Hand Function Rehabilitation Training Assistant